CHAMPAIGN, Ill. -- New work by a University of Illinois Urbana-Champaign scholar harnesses the power of generative artificial intelligence, using it in tandem with measurement-based care and access-to-care models in a simulated case study to create a novel framework that promotes personalized mental health treatment, addresses common access barriers and improves outcomes for diverse individuals.

Social work professor Cortney VanHook led the research, in which he and his co-authors used generative AI to simulate the mental health journey of a fictitious client named “Marcus Johnson,” a composite of a young, middle-class Black man with depressive symptoms who is navigating the health care system in Atlanta, Georgia.

In response to the researchers’ prompts, the AI platform created a detailed case study and treatment plan for the fictional client. Based on the personal details that the researchers used in the prompt, the AI platform examined the simulated client’s protective factors, such as his supportive family members, and his potential barriers to care, including gendered cultural and familial expectations, along with his concerns about obtaining culturally sensitive treatment due to the shortage of Black male providers in his employer-sponsored health plan’s network.

Real-world simulations allow practitioners to understand individuals’ pathways to mental health care, common access issues and demographic disparities, VanHook said.

Moreover, using a simulated client mitigates concerns about breaching patient privacy laws, thereby enabling practitioners, trainees and students to explore and refine potential interventions in a low-risk environment, promoting more equitable, responsive and effective mental health systems, he said.

“What’s unique about this work is it’s practical and it’s evidence-based,” VanHook said. “It goes from just theorizing to actually using AI in mental health care. I see this framework applying to educating students about populations they might not be familiar with but will come in contact with in the field, as well as its being used by supervisors in the field when they’re training their students or by clinicians on how to understand and best support clients that come to their facilities.”

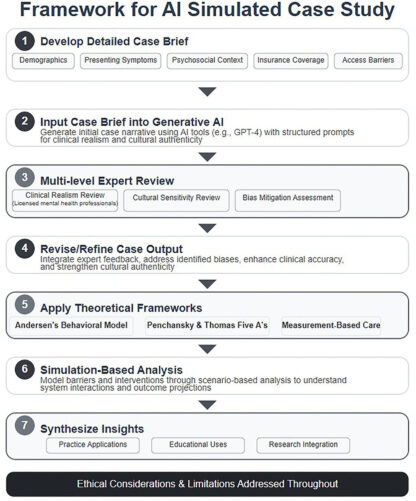

VanHook and co-authors Daniel Abusuampeh of the University of Pittsburgh and Jordan Pollard of the University of Cincinnati prompted the AI platform to apply three theoretical, evidence-based frameworks in creating its simulated case study and treatment plan for the virtual client.

The AI software was prompted to use Andersen’s Behavioral Model, a theory about the factors that determine individuals’ health services utilization, to examine the personal, cultural and systemic factors that supported or hindered the client’s use of mental health services. In addition, the proposed treatment plan incorporated a theory about the five components of access to evaluate the availability, accessibility, accommodation, affordability and acceptability of care for the client, along with measurement-based care, a clinical approach that applies standardized, reliable measures for ongoing monitoring of the client’s symptoms and functioning.

The team used measurement-based care to refine the treatment approaches recommended by AI. To ensure that the AI-generated simulation reflected real-world clinical practice, VanHook and Pollard, who are both licensed mental health professionals, reviewed the proposed treatment plan to verify its clinical accuracy and compared the case brief against published research findings.

As all three authors identify as Black men, they confirmed the materials’ cultural sensitivity and conceptualization of the barriers that Black men often face in the U.S. mental health system.

“Every population, regardless of race, age, gender, nationality and ethnicity, has a unique mental health care pathway, and there’s a lot of information out there in AI to understand different populations and how they interact with the mental health field. AI has the ability to account for the complex barriers as well as the facilitators of population-wide mental health care,” VanHook said.

The authors acknowledged that the content generated by AI technology can be limited by the data and patterns in the platform’s training set, which may not reflect the diversity, unpredictability or emotional nuances of clinical encounters. Likewise, despite the evidence-based frameworks that were applied in the project, VanHook said these do not address all of the systemic and structural barriers experienced by Black men or capture every social, cultural or individual factor that influences clients’ care.

Nonetheless, the team maintained in the paper, which was published in the journal Frontiers in Health Services, that generative AI holds significant promise for improving access, cultural competence and client outcomes in mental health care when integrated with evidence-based models.

“AI is a train that’s already in motion, and it’s picking up speed. So, the question is: How can we use this amazing tool to improve mental health care for many populations? My hope is that it’s used in the field, as a tool for teaching and within higher order management and administration when it comes to mental health services,” VanHook said.

In August, Illinois Gov. JB Pritzker signed a new law, the Wellness and Oversight for Psychological Resources Act, that limits the use of AI in mental health care “to administrative and supplementary support services” by licensed behavioral health professionals. The new policy came in response to reports of youths in the U.S. committing suicide after interactions with AI chatbots.

“The use of AI in the manner in our study complies with the new state law if it is used in the process of education and clinical supervision,” VanHook said. “The measurement-based process described might blur the lines, so I would urge caution against its use beyond education and clinical supervision purposes until we receive more guidance from the state.”